Why Complex Systems Collapse Faster

All civilizations collapse. The challenge is how to slow it down enough to prolong our happiness.

During the first century of our era, the Roman philosopher Lucius Annaeus Seneca wrote to his friend Lucilius that life would be much happier if things would only decline as slowly as they grow. Unfortunately, as Seneca noted, “increases are of sluggish growth but the way to ruin is rapid.” We may call this universal rule the Seneca effect.

Seneca’s idea that “ruin is rapid” touches something deep in our minds. Ruin, which we may also call “collapse,” is a feature of our world. We experience it with our health, our job, our family, our investments. We know that when ruin comes, it is unpredictable, rapid, destructive, and spectacular. And it seems to be impossible to stop until everything that can be destroyed is destroyed.

The same is true of civilizations. Not one in history has lasted forever: Why should ours be an exception? Surely you’ve heard of climatic “tipping points,” thresholds beyond which, for example, Earth’s climate system could begin to collapse. Crossing one of them might turn Earth into a very different planet, one on which it is not clear that humankind could survive. It is hard to imagine a more complete kind of ruin.

So, can we avoid collapse, or at least reduce its damage? That raises another question: What causes collapse in the first place? In Seneca’s time, people were content just to note that collapses do, in fact, occur. But today we have robust scientific models of what we call “complex systems.” Figure 1 shows the typical behavior of a collapsing system, calculated using a simple mathematical model.

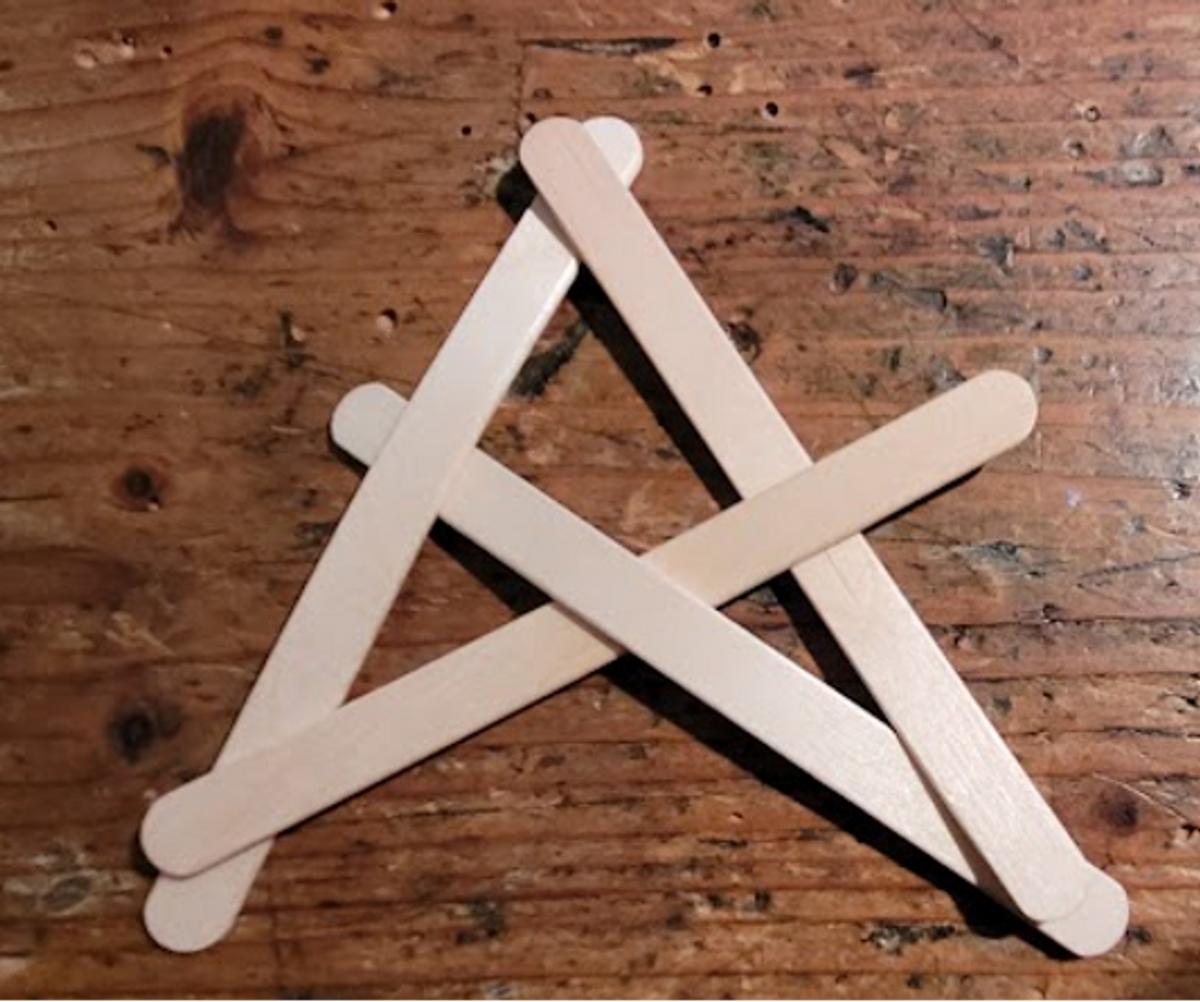

But we can also make simple, nonmathematical models of collapse. Look up the term “stick bomb,” and you’ll find many examples of geometric structures made from popsicle sticks that appear stable—until you shift one of the sticks away from the others. Then the whole thing suddenly disappears in a small explosion of flying sticks. A harmless kind of Seneca effect.

A stick structure is a very simple thing, but it embodies the essence of the mechanism of collapse because it is a network. A network is composed of many elements, or “nodes,” connected to one another by “links.” In a stick bomb, the nodes are the points where the sticks touch each other, while the sticks themselves are the links. When you remove one of the links, you weaken the nearby nodes. When one node lets go of a link, the collapse moves to other nodes. This is sometimes called a “cascading failure,” another name for the Seneca effect.
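The stick-bomb mechanism can be mimicked with a toy network model. The sketch below is our own illustration under stated assumptions, not a model from this article: the nodes sit in a ring, each carries one unit of load, and `capacity` (a hypothetical parameter) is the most load a node can bear. When a node fails, its load is shared equally among its surviving neighbors, a rule common in simple models of cascading failure, and any neighbor pushed past capacity fails in turn.

```python
from collections import deque


def ring(n):
    """Toy network: node i is linked to nodes i-1 and i+1 (wrapping around)."""
    return {i: [(i - 1) % n, (i + 1) % n] for i in range(n)}


def cascade(adj, capacity, first_failure, load=1.0):
    """Return the set of failed nodes after knocking out `first_failure`.

    Assumed rule: every node starts with the same load; a failed node hands
    its load in equal shares to its still-working neighbors, and any
    neighbor whose load then exceeds `capacity` fails as well.
    """
    loads = {node: load for node in adj}
    failed = {first_failure}
    queue = deque([first_failure])
    while queue:
        node = queue.popleft()
        alive = [nb for nb in adj[node] if nb not in failed]
        if not alive:
            continue  # no working neighbor left: the load is simply lost
        share = loads[node] / len(alive)
        for nb in alive:
            loads[nb] += share
            if loads[nb] > capacity:
                failed.add(nb)
                queue.append(nb)
    return failed


if __name__ == "__main__":
    print(len(cascade(ring(20), capacity=1.4, first_failure=0)))  # 20
    print(len(cascade(ring(20), capacity=1.6, first_failure=0)))  # 1
```

With a 40 percent safety margin, removing a single node brings down the whole ring; with a 60 percent margin, the damage stops at that node. Before anything is removed, the two networks look equally stable.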

It is a curious feature of cascading failures that all the elements in the system collaborate to bring the system down entirely. This happens because, in a network, small perturbations are rapidly transmitted to the entire structure. Recall the proverbial straw that broke the camel’s back. A straw is a very small thing that weighs almost nothing, yet it can bring down a thousand-pound camel. Or consider how small cracks can grow until they eventually destroy the largest structures. That is how cascading failures unfold: A small local break spreads until it brings down the whole.

It turns out that large networks are not any less prone to collapse than small ones—in fact, they might actually be more sensitive to perturbations. Think of ancient Rome: When Seneca wrote that “ruin is rapid,” he may well have noticed the first cracks in the stupendous structure of the empire. A few centuries later, it disappeared. Its ruin was rapid, considering that Roman civilization had been standing for more than a thousand years.

The collapse of the Roman Empire has fascinated historians for centuries. Why did it happen? Many different explanations have been proposed, but perhaps we can get closer to the right one by noting that the Roman Empire was a network. It was kept together by a large number of connections among people who exchanged food, goods, services, and more. Most of these connections had a common element: They were created by money. In turn, Roman money was based on precious metals—silver and gold that the Romans mined in northern Spain. As long as the mines were producing, the Romans could mint coins to pay their soldiers, bureaucrats, workers, and artisans.

Yet there was a big problem with this system: Mining was expensive. It was done mainly by slaves, and even though the slaves were not paid, they still required food, shelter, and tools. As mining went on, the “easy” veins of precious metals were exhausted, forcing miners to dig deeper and to work less concentrated veins. Maintaining the same level of production, then, required increasing effort over time: more miners, more food, more shelter, more tools. And there was a limit to how deep the mines could go. The problem of mine depletion may already have been felt in Seneca’s time, the first century CE. By the third century CE, Roman mining production had collapsed. At that point, gold started vanishing from the empire; most of the gold in circulation went to China to pay for luxury items such as silk. Eventually, no gold meant no money. No money meant the troops couldn’t be paid. And if the troops couldn’t be paid, the barbarians invading the empire couldn’t be repelled. And how to pay the bureaucrats, the judges, the police, and the maintenance of roads? No wonder the empire collapsed. As Seneca had written: Ruin is rapid.

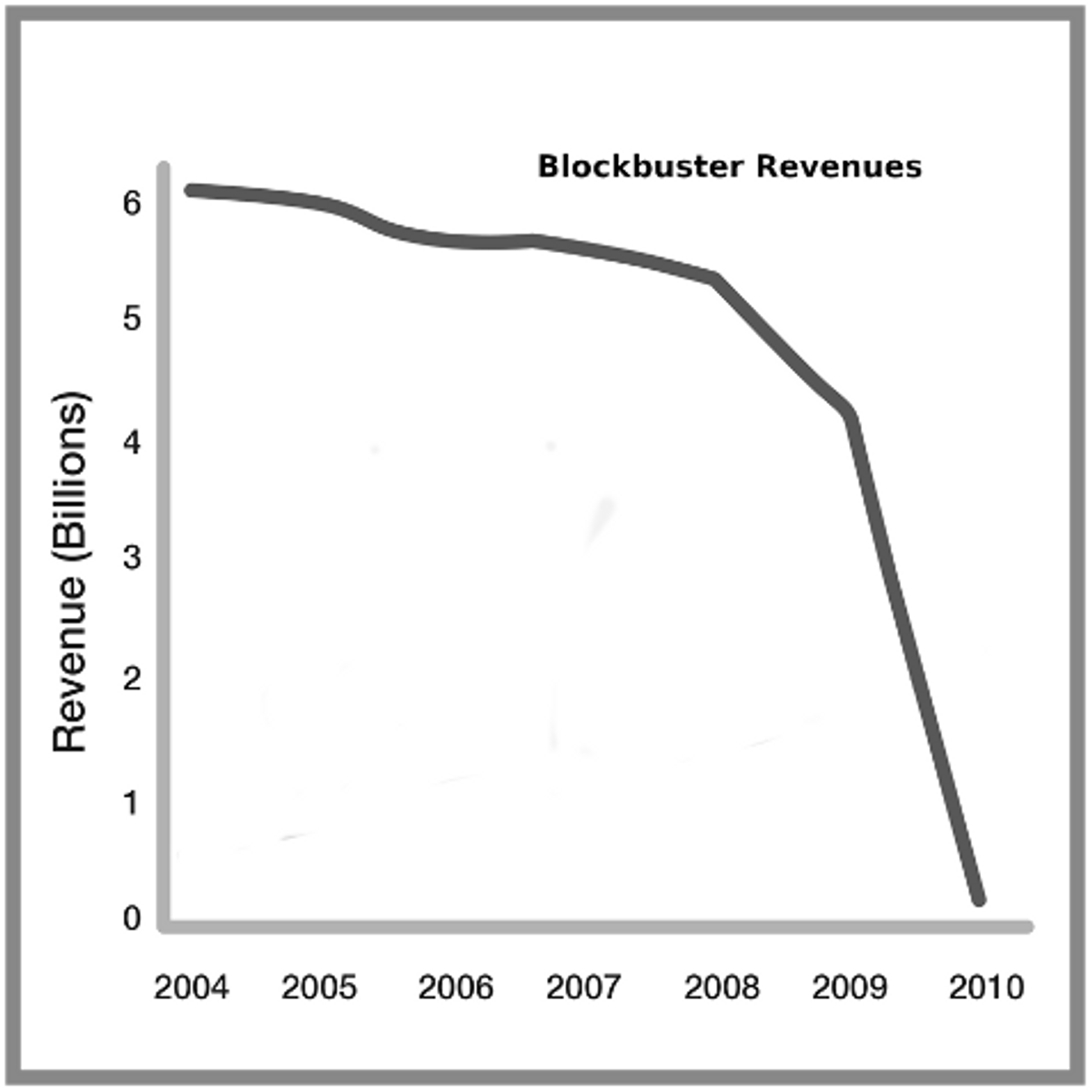

Let’s consider another example, one closer to our times. You might remember Blockbuster, the American video rental company that for some time was the largest of its kind in the world, with around 65 million registered customers at its peak. Blockbuster, too, was a network: one formed by the links that the company had with its customers. But by the early 2000s, those links started breaking down: The company was losing customers because its business model had become obsolete. Blockbuster reacted by charging its remaining customers more. That led to more customers disappearing—a vicious cycle of link-snapping that quickly ended in bankruptcy in 2010. Blockbuster’s revenues followed a typical Seneca curve, or cliff (see Figure 3).
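Blockbuster’s vicious cycle can be written as a feedback loop. The following is a hypothetical sketch with invented numbers, not a reconstruction of Blockbuster’s actual data: a fixed fraction of customers leaves every year (`base_churn`, standing in for an aging business model), an extra fraction leaves in proportion to how much the price has risen (`sensitivity`, an assumed parameter), and after each year the price is raised so that revenue returns to its starting level.

```python
def price_spiral(customers, price, base_churn, sensitivity, years):
    """Return the fraction of customers lost in each simulated year.

    Hypothetical model: each year `base_churn` of the customers leave, plus
    `sensitivity` times the relative price increase since year 0. The price
    is then reset so that revenue (customers * price) is back at its
    starting value.
    """
    target_revenue = customers * price
    start_price = price
    rates = []
    for _ in range(years):
        # Churn grows with every price hike; it cannot exceed 100 percent.
        churn = min(1.0, base_churn + sensitivity * (price / start_price - 1))
        rates.append(churn)
        customers *= 1 - churn
        if customers <= 0:
            break  # no customers left: the business is gone
        # "Pull the lever": charge the remaining customers more.
        price = target_revenue / customers
    return rates


if __name__ == "__main__":
    for s in (0.0, 0.5):
        rates = price_spiral(1.0, 1.0, base_churn=0.05, sensitivity=s, years=10)
        print(s, [round(r, 2) for r in rates])
```

With `sensitivity=0`, the business shrinks steadily at 5 percent a year. With `sensitivity=0.5`, the yearly loss rate climbs every year until the customer base is gone, well before the ten simulated years are over: The price hikes turn a slow decline into a cliff.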

What about our civilization? Will it collapse, too? We don’t depend on gold, like the Roman Empire, nor on customers, like Blockbuster. But we do depend on critical nodes that make up a vast network: the energy production system, the financial system, the climate system, and many more. If any one of these subsystems fails, it could send out reverberations that generate a cascade of failures, bringing the whole system down. We’ve seen ominous shocks just in the last century: the world wars, the Great Depression, the 1973 oil crisis, the 2008 financial crisis, the COVID-19 pandemic. What will be the next shock? When it arrives, our civilization could quickly become just another paragraph in future history books. And if something goes really wrong with the Earth’s climate, there may not be anyone left to read them.

I’m aware that all this sounds a bit pessimistic. But planning for the worst possible scenario is not pessimistic—it is simply prudent. It is what you do when you put on your seat belt before driving a car. So, let’s ask ourselves practical questions: Can anything be done to avoid collapses? Or at least to reduce the damage they cause?

Perhaps the first person to reason in scientific terms about how to avoid collapse was the American scientist Jay Forrester (1918–2016). He founded the field known today as “system dynamics.” To him we owe the idea that when people try to avoid collapse, they usually take actions that worsen the situation. Forrester described this tendency as “pulling the levers in the wrong direction.”

Forrester’s idea is powerful and helps explain why things go bad so fast, so often. Consider the ancient Romans again. They tried to stop the collapse of their empire by putting all the resources they had into keeping their military as strong as possible. It seemed like a good idea: You need a strong army to stop the barbarian invasions. But to pay the soldiers they had to raise taxes, and they didn’t realize that doing so would bleed dry the very system they were trying to keep alive. The Romans pulled the levers in the wrong direction, as is typical: Empires tend to bankrupt themselves with excessive military expenses. In more recent times, it happened to the Soviet Union; it may well be happening in parts of the Western world today.

How about Blockbuster? The company’s managers understood that their customer base was eroding, so they spent a lot of money on advertising. That worked for a while, but it left them with less money for technological improvements. Spending dwindling resources on advertising the old product rather than upgrading to a new one was pulling the levers in the wrong direction.

One final example: the “miracle” of shale oil. Some years ago, you probably heard about “peak oil,” the supposed tipping point that would lead to the irreversible decline of global oil production. According to some models, the gradual depletion of oil reserves should have led to a maximum production level (the “peak”) at some point during the first decade of the 21st century, perhaps causing the ruin of our entire civilization. Needless to say, it didn’t happen: Oil production continued to increase. From this, some concluded that “peak oil” will never occur, which is a bit of an overinterpretation; oil remains, after all, a finite resource. The reason global oil production kept increasing is that a lot of money was poured into extracting “shale oil” in the United States using hydraulic fracturing (“fracking”). This kind of oil is expensive to produce, but it did avert collapse, for now. Yet depletion is already a concern for the shale oil industry, too, and a collapse in the production of liquid fuels could occur very, very rapidly: another Seneca cliff. Pouring money into shale oil was another example of pulling the levers in the wrong direction: The resources invested in developing and producing it would probably have been better spent on renewables or energy efficiency.

Surely you can think of more examples of Forrester’s effect from your own personal experience. So, we know what not to do when facing collapse: Don’t pull the levers in the wrong direction. But what should we do, then? There are no fixed rules, but doing the right thing often involves counterintuitive decision-making.

One possibility is to circumscribe the damage in order to save most of the structure: If you divide a system into small, independent subsystems, you can prevent the cascade of failures from propagating over the network; if the subsystems do not communicate with each other, then the failure cannot move so easily from one to another. It is a well-known strategy in materials science: “Composite” materials are stronger than homogeneous ones because their internal boundaries can stop a crack from expanding. In economic systems, we say that “small is beautiful.” In social systems, the movement called “transition towns” is based on the idea that a village is more resilient than a whole state. And a federation of smaller states may be more flexible in facing difficulties and absorbing shocks than a larger, centralized state.

Another idea that may help avoid collapse is to strive to maintain a certain balance among the elements of the system. Here, we can learn something from Elinor Ostrom, the first woman to receive the Nobel Prize in economics. When Ostrom examined the decision-making methods of successful social systems, she found that they operate as a network in which all the decision-makers in a certain sector are also stakeholders in that sector, and that decisions are always made by negotiated agreements. In other words, you should avoid rigid, military-style management, in which the decision-makers do not necessarily suffer personal consequences from their decisions, because it is especially prone to collapse. Perhaps Ostrom’s advice is not so different from what the ancient Chinese philosopher Lao Tzu said in the Tao Te Ching: “rigidity leads to death, flexibility results in survival.”

In the end, it may well be that some collapses are unavoidable: It is the way the universe gets rid of old things to replace them with new ones. So we may simply have to accept that things can go wrong, as they often do. The ancient Stoic philosophers, including Seneca, concluded that when something bad happens to you, you should try to accept it, to go with the flow, and cultivate instead your personal virtue, which no one can take away from you. We have another small gem of wisdom from Seneca in a letter he wrote to his mother, Helvia: “No man loses anything by the frowns of Fortune unless he has been deceived by her smiles.” In other words, if you are not deluded into thinking that the good times will last forever, you may simply come to terms with decline and discover the ways in which life is still possible even after collapse.

Ugo Bardi is a former professor of physical chemistry at the University of Florence, Italy. He is a full member of the Club of Rome, an international organization dedicated to promoting a clean and prosperous world for all humankind, and the author, among other books, of The Seneca Effect (2017), Before the Collapse (2019), and The Empty Sea (2021). His research has included the study of pollutants.