The Nanny vs. The Nanny State

Only one thing can save us from predatory AIs: our own data

Valerie Macon/AFP via Getty Images

Valerie Macon/AFP via Getty Images

Valerie Macon/AFP via Getty Images

As Hollywood recycles comic book remakes with funding from China, the once-great cultural capital appears to be in a state of steep decline. But in one area, at least, the movie industry is offering a bold and inspiring vision for the future. The writers and actors who went on strike to prevent studio owners from using artificial intelligence (AI) to exploit and expropriate their work are on the front lines of a new data war triggered by the explosive growth of AI. This past July, Fran Drescher, former star of the popular sitcom The Nanny, and now president of the SAG-AFTRA union, described the stakes: “If we don’t stand tall right now, we are all going to be in trouble, we are all going to be in jeopardy of being replaced by machines.”

Drescher is right. Across the U.S. and the global economy, corporations are strip mining people’s data and then using it to train the AI systems that will put those very people out of work. Two days before this article was published, the Writers Guild of America reached a tentative agreement with the studios for a new three-year deal. According to Variety, “details of language around the use of generative AI in content production was one of the last items that the sides worked on before closing the pact.” With the writers bringing their five-month strike to a close, the actors guild could soon reach its own deal. While the Hollywood shutdown has undoubtedly been painful for many of the people involved, we believe that workers who do not manage to secure protections against AI will be in for far worse outcomes. Doomsday scenarios about fully sentient AI—aside from being good marketing gimmicks for the tech industry—distract from the real and immediate danger posed by the technology: Not the rise of terminator robots but that tens or hundreds of millions of people will suddenly lose their jobs.

Though AI technologies have been around for a while, they have only recently begun to work in a way that everyday people can understand. ChatGPT isn’t the super intelligence of science fiction, but it delivers results on par with the capabilities of a well-educated or skilled human being across various tasks. As a result, thousands of new AI companies launched over the last couple of years have collectively generated billions of unique monthly visitors to their sites. And as the immense power of Facebook, Twitter, and other social networking sites has shown, platforms capable of generating billions of visitors are extraordinarily valuable.

Both stand-alone AIs like ChatGPT, and those built into other applications where they can do everything from automate medical screenings to “socialize” with lonely people, are poised to become the most valuable technological artifacts ever built—potentially worth tens of trillions of dollars over the next decades. The problem is that AIs are data gluttons. Because there’s a strong correlation between the amount of data used to train an AI and the quality of its outputs (large quantities of data even allow you to ignore many quality issues), the companies running them need access to vast sums that are constantly replenished.

Until recently, it was relatively easy to acquire vast quantities of data. AI developers and platforms acted like prospectors in an unregulated frontier, hauling publicly available information from the open internet with abandon. Untold reams of data were simply screen-scraped, downloaded from questionable sources, or acquired under academic access. Now that AIs have proven their commercial worth, however, with trillions in potential revenue on the line, gates are being put up at a rapid clip.

Already, Twitter, Reddit, and other big sites are restricting access to the information collected from their users to prevent companies from harvesting and then monetizing that data. Twitter introduced a rate limit, setting a cap on the number of posts that users can see per day. These moves have triggered a backlash from users who view such policies as intrusive and shortsighted. However, the truth is that sites that don’t build such barriers are inviting other companies to treat them as a free resource and training ground. Even the AI sites themselves, from OpenAI to Midjourney, are putting limits in place to prevent their products from being used to train other AIs. Tech companies are also signing small data licensing deals as part of a strategy to build a legal case showing that the unlicensed use of their data damages their business. Finally, there has been a growing number of copyright suits made against the larger AI companies by copyright holders, from big firms like Getty Images to individuals like comedian Sarah Silverman. In her lawsuit, Silverman’s attorneys contend that OpenAI and Meta acquired her book—along with countless others—through illegal torrenting sites and then used her intellectual property to train their AI models.

The abruptness of technological change and the reluctance to prepare for this eventuality (one of the authors of this article testified before the U.S. Senate a few years ago on ways to avoid this mess) is creating a slow-moving disaster with cascading downsides for America’s social and economic order. Especially given that past methods of protecting intellectual property, such as copyrights, no longer offer effective protection. The problem is that the large language learning model (LLM) AIs like ChatGPT and its competitors aren’t making a copy; they are “learning” from preexisting data in much the same way that humans learn from reading a book, listening to music, or seeing a picture. Furthermore, it’s not considered a copyright infringement even if the AI uses a temporary copy of copyrighted data (image, video, text, voice, etc.) to train an AI. It’s precisely because copyright and licensing claims are rendered so weak by this new technology that firms are turning to crude access restrictions (blocking APIs and rate limits) and licensing agreements (if you access this data, you will abide by our rules on its use and pay us) to protect data obtained from their users.

If current trends continue, the tension between AI’s insatiable demand for data and the proprietary interests of current data businesses will turn the internet into a feudal landscape.

Raising the drawbridge on data access will make it increasingly difficult for new AI firms to acquire the data needed to train high-quality AIs. Such a scenario will only concentrate more power and wealth in the hands of the current information barons. Early entrants to the AI field, already trained on what the open web had on offer, will find themselves well-protected against competition, allowing them to charge monopoly rents. As the web becomes sclerotic, AI developers will turn to niche applications and sources, paying to acquire the data people produce at work or cutting deals to extract it from people when they enter public spaces.

If current trends continue, the tension between AI’s insatiable demand for data and the proprietary interests of current data businesses will turn the internet into a feudal landscape. Every site and network with the power to do so will put up walls to prevent bots from “looting” their estates, and will only grant access through licensing agreements. Finding and reporting on the abuses in these walled gardens will become harder as sites cut off public access.

The biggest losers in this new data grab won’t be the companies involved. It will be the individuals who produce the data that make AIs valuable and yet will be cut off from its profits. While the big social networks can erect barriers around their platforms, the average internet user lacks the technical means to prevent their public behaviors from being collected and fed to AIs. Without some kind of data ownership rights, nearly all of us online will be put into the business of selling ourselves. Only a small number will receive any economic compensation while, for the great majority of people, “payment” from the AI companies will come in the form of access to their platforms. You won’t really own anything but you’ll get the privilege of squatting in the metaverse.

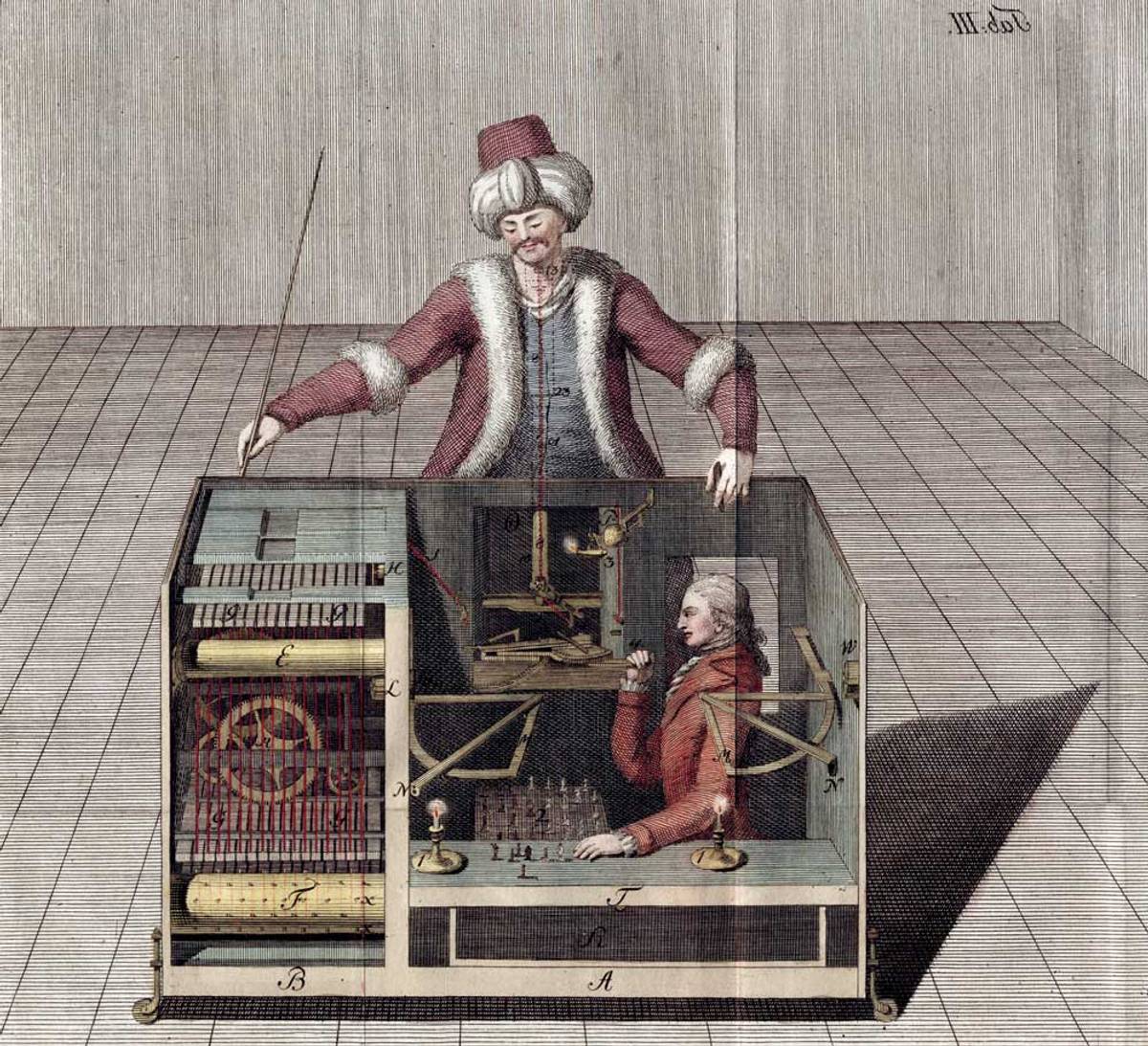

In this future, which actors like Silverman and Drescher correctly recognize as a looming reality, every good or service you buy or job you work might require you to contribute data and relinquish all claims to it. Individuals whose jobs are not fully outsourced to the AIs they helped train will be turned into “Mechanical Turks.” The original Mechanical Turk was a chess machine built in the 18th century and an elaborate ruse built to hide human agency behind technological artifice. Touted as a fully automated robot, the machine’s chess-playing ability was the result of a hidden human operator. More recently, Amazon adopted the name “Mechanical Turk” for its “crowdsourcing marketplace” that uses remote workers to perform tasks, including the training of AIs. Today, the term “Turk” also refers to human workers who provide data that is used to train or fix the outputs generated by AIs.

What would a “Turking” economy look like? Here’s one potential scenario: Employees at a large firm are forced to contribute data on everything they do that’s classified as work, whether it takes place in the office or, for remote workers, in their homes. Video. Text. Voice. Metadata gathered from sensors monitoring their peak working hours, heart rate, and other biomarkers, and frequency of bathroom breaks. Workers who refuse those terms discover that, with AIs already being trained to automate their entire industry, the few alternative jobs available would be even worse. With the employees now brought in line with their new “workplace,” the information collected from them is used to train AIs that will replicate the skills and even mimic the workers’ mannerisms, for instance by incorporating vocal inflections and facial expressions into customer service bots programmed to display “human” emotions. A human worker might then be paid, at a reduced rate, to provide feedback to their new AI replacement, in order to perfect its performance or fill in when it falls short.

Luckily, this scenario is not inevitable. One model of an effective response is being provided by the Hollywood strikers. To protect their work, the screenwriters and actors guilds issued a set of demands.

In short, the guilds’ demands offer a strategy for preserving the value of human work in the age of AI. The same pressures affecting writers and actors are going to sweep over virtually every other kind of work. These are the types of protections we should all be seeking, and we should be seeking them now. Soon it will be too late.

Wikipedia

AI presents an immense problem for people concerned about the future of free societies, but there is at least one simple solution available—data ownership rights for everyone. Every individual should have an ownership stake in the data they produce, regardless of where it is stored or how it is captured. Data generated from writing (posts, tweets, essays, etc.), images (whether drawings or photos posted to sites like Instagram), sounds (from music to voice), and video should belong to the human beings who create them.

The first step is to mandate that companies secure the positive consent of the person whose data they are scraping and harvesting from the web. This will require some changes to the internet’s current infrastructure, but tracing data back to their originator may be easier than many people imagine, given that big tech companies already employ pervasive surveillance systems to track their users every keystroke. Any AI system developed before the new requirement was put in place or where it isn’t possible to acquire consent, must be released as an open-source product.

In a system based on ownership instead of extraction and rent-seeking, individuals can pool their data for licensing in exchange for a royalty. This works well for big sites already aggregating vast pools of data. Standardized royalty rates for data contributions, based on the value generated by the AI, would minimize the abuse of employees unable to negotiate market rates. Royalties would incentivize the development of a dynamic market for data and open the web again, reversing the damage currently underway.

You won’t really own anything but you’ll get the privilege of squatting in the metaverse.

The proposal is less radical than it seems at first glance. Your data is already valuable. But now the only people who see the benefits of that value are the tiny number of corporations in the data harvesting business. To prevent the total obliteration of the middle class and preserve the possibility for America to be a free and democratic country, the necessary next step is to let individuals in on the profits generated by their labor.

In time, a data ownership system could foster the development of an industry akin to what we see in the financial world. Data brokers and banks would work for individuals with a fiduciary duty to get the best returns and protections possible. As AIs improve, and are folded into every product and service, this royalty payment should become substantial over time.

Rather than granting individuals ownership of their data, some people have argued that a better solution would be to nationalize AI, treating it as something like a public utility. We see many problems with this approach, but to cite only the most obvious: The government is already involved in AI and has used it not to promote the common good but as a political weapon. A co-author of this article addressed that dynamic earlier this year in testimony given before the U.S. Senate: “We seem to be caught in a trap. There is a vital national interest to promote the advancement of AI. Yet, at present, the government’s primary use of AI has been as a political weapon to censor information that it or its third-party partners deem harmful.”

There is no panacea that can prevent the inevitable volatility and harsh tradeoffs that AI will bring. But in moments of dramatic uncertainty, it is helpful to consider the lessons of the past. Between a future that resembles feudal collectivism and one that emulates the early liberal model of property rights, we believe the latter will be better both for the vast majority of individuals and for the social fabric of American society. Making people owners of the data they create would improve privacy protections without sacrificing scientific and technological innovation. Some individuals would still choose to hide or anonymize their presence online. But many others would be financially rewarded for bundling and packaging their data in useful forms that would make it more reliable and increase its value. More and better data being available would in turn create a highly competitive AI industry, with a large number of new entrants. This would lower the costs of AI services across the board, and drive up the royalties provided to data owners.

Participatory equity through data ownership in the growing AI-fueled economy is a judo move that would change the dynamic of our society for the better. We would flip from seeing the future and technological innovation as a threat to an opportunity for advancement in which we all have a stake.

John Robb is the editor of the Global Guerrillas Report on Substack and Patreon. John flew tier-one special operations for the United States military, founded numerous tech companies, pioneered social networking, and is a well-known military and technology strategist.

Jacob Siegel is Senior Editor of News and The Scroll, Tablet’s daily afternoon news digest, which you can subscribe to here.